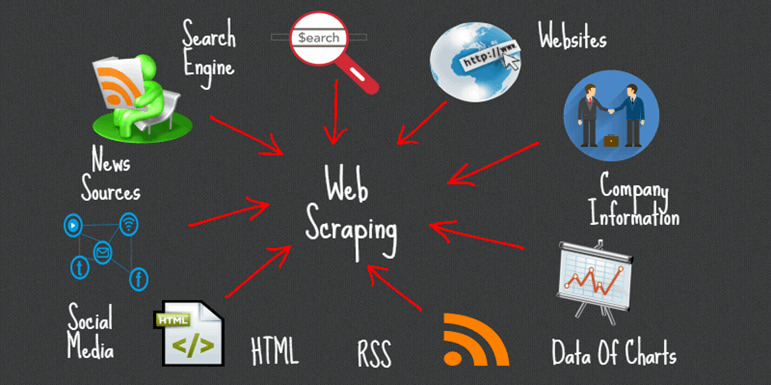

Web scraping is an essential tool for gathering valuable data from websites, but the process can be complex and prone to errors. Whether you’re new to scraping or an experienced developer, mistakes are easy to make, and they can lead to inefficient data extraction, inaccurate results, or even legal issues. In this article, we’ll explore the top 5 web scraping mistakes to avoid for better, more reliable data extraction.

- Failing to Handle Dynamic Content Correctly

One of the most common mistakes in web scraping is failing to account for dynamic content, such as data loaded via JavaScript or AJAX. Many modern websites use JavaScript to generate or update content after the initial HTML page has loaded. Traditional scraping tools like BeautifulSoup and Scrapy work on the HTML the server returns and do not execute JavaScript, so content injected later by scripts never reaches them. To avoid this issue, use browser automation tools like Selenium or Playwright, which render JavaScript and let you capture data as it appears on the page. Skipping this step results in incomplete or outdated data, because the information you need may not be present in the raw HTML source.
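As a rough illustration, the Python sketch below first checks whether a target element is empty in the raw HTML (a common sign that JavaScript fills it in later) and, if so, renders the page with Playwright's synchronous API. The helper names `text_in_raw_html` and `fetch_rendered_html`, the element id, and the wait selector are hypothetical; running the Playwright part assumes `pip install playwright` followed by `playwright install chromium`.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class _TextById(HTMLParser):
    """Collects the text inside the element whose id attribute matches target_id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.depth = 0      # > 0 while the parser is inside the target element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag not in VOID_TAGS:
                self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags like <br/> contain no text and do not nest

    def handle_endtag(self, tag):
        if self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def text_in_raw_html(html, element_id):
    """Return the text of an element as served, before any JavaScript runs."""
    parser = _TextById(element_id)
    parser.feed(html)
    parser.close()
    return "".join(parser.chunks).strip()


def fetch_rendered_html(url, wait_selector):
    """Render the page in headless Chromium and return the HTML after scripts run."""
    from playwright.sync_api import sync_playwright  # optional dependency, imported lazily

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        page.wait_for_selector(wait_selector)  # wait until the dynamic content exists
        html = page.content()
        browser.close()
    return html
```

If `text_in_raw_html` comes back empty for content you can see in the browser, that content is most likely rendered client-side, and a rendering step such as `fetch_rendered_html` is the fallback.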

- Ignoring Legal and Ethical Guidelines

Another critical mistake when web scraping is neglecting the legal and ethical considerations associated with data collection. Many websites explicitly prohibit scraping in their terms of service, and scraping too aggressively can lead to IP blocking or legal consequences. Ignoring these guidelines can get your project shut down or, worse, expose you to legal action. To avoid this, always review a website’s terms and conditions before scraping and ensure compliance with relevant laws like the General Data Protection Regulation (GDPR). Additionally, respecting a website’s robots.txt file, which tells crawlers which paths they may and may not request, helps ensure you are scraping ethically and responsibly.
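Python’s standard library includes `urllib.robotparser` for reading robots.txt rules. The minimal sketch below parses an example robots.txt held in a string (the rules, user-agent name, and URLs are made up for illustration) and asks whether specific URLs may be fetched. It covers only the robots.txt part of the advice, not a site’s terms of service or laws such as GDPR.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (hypothetical); in practice you would download it from the site.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Build a RobotFileParser from robots.txt text that is already in memory."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


if __name__ == "__main__":
    rules = load_rules(EXAMPLE_ROBOTS_TXT)
    agent = "my-scraper"  # hypothetical user-agent string
    for url in ("https://example.com/products", "https://example.com/private/data"):
        print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")
    print("crawl delay:", rules.crawl_delay(agent))
```

For a live site, `RobotFileParser("https://example.com/robots.txt")` followed by `.read()` downloads and parses the file in one step.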

- Overlooking Rate Limiting and Request Frequency

Sending too many requests to a website in a short period is another common mistake that can lead to blocking or throttling. Websites often use rate-limiting mechanisms to prevent excessive scraping, and firing requests too quickly can trigger these defenses and get your IP address blocked. To avoid this, implement rate limiting in your scraping scripts by spacing out requests and introducing random delays between them. Using rotating proxies or VPNs is another strategy for distributing your requests across different IP addresses, reducing the risk of being blocked. Together, these steps help keep your scraping efficient without overloading the website’s server or triggering anti-scraping measures.
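One way to space out requests is a small throttle that enforces a minimum gap between calls and adds random jitter on top. The `PoliteThrottle` class below is a hypothetical helper, and its default interval and jitter values are placeholders rather than recommendations for any particular site. The clock and sleep functions are injectable so the timing logic can be tested without actually waiting.

```python
import random
import time


class PoliteThrottle:
    """Enforces a minimum gap between requests, plus a random jitter on top."""

    def __init__(self, min_interval=1.0, jitter=0.5,
                 clock=time.monotonic, sleep=time.sleep, rng=None):
        self.min_interval = min_interval   # seconds that must separate two requests
        self.jitter = jitter               # up to this many extra random seconds
        self.clock = clock
        self.sleep = sleep
        self.rng = rng or random.Random()
        self._last = None                  # time of the previous request, if any

    def wait(self):
        """Block until the next request is allowed; return the seconds slept."""
        now = self.clock()
        target_gap = self.min_interval + self.rng.uniform(0, self.jitter)
        delay = 0.0
        if self._last is not None:
            # Only sleep for whatever part of the gap has not already elapsed.
            delay = max(0.0, target_gap - (now - self._last))
        if delay:
            self.sleep(delay)
        self._last = now + delay
        return delay
```

Calling `throttle.wait()` immediately before each HTTP request is enough to enforce the spacing; the random component makes the request pattern less regular than a fixed sleep would.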

- Not Preparing for Data Cleaning and Parsing Challenges

Data collected through web scraping is often messy and needs significant cleaning and parsing before it can be used effectively. A common mistake is assuming that the data will arrive in a clean, structured format ready for analysis. In reality, you might encounter missing values, inconsistent formatting, or irrelevant and redundant content (e.g., ads, navigation bars, or duplicate entries). Plan for data cleaning as part of the scraping process, starting with a scraper that targets only the relevant content. Tools like Python’s Pandas library can help clean and structure your data, while regular expressions (regex) or CSS selectors can help extract specific pieces of information. A scraper that outputs clean, usable data will save you time and effort later on.
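The sketch below shows the kind of cleanup described above on a few made-up product records: it normalizes whitespace, pulls a numeric price out of text with a regular expression, drops rows missing required fields, and removes duplicates. It uses only Python’s `re` module; with pandas, similar steps could be expressed with methods such as `str.strip`, `dropna`, and `drop_duplicates`. The field names `name` and `price` are assumptions for illustration.

```python
import re

# Matches a number such as "1,299.00" or "15" inside surrounding text.
PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(raw):
    """Extract the first number from text like '$1,299.00'; return None if absent."""
    if not raw:
        return None
    match = PRICE_RE.search(raw)
    return float(match.group().replace(",", "")) if match else None


def clean_records(records):
    """Normalize names and prices, drop incomplete rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        name = " ".join((rec.get("name") or "").split())  # collapse stray whitespace
        price = parse_price(rec.get("price"))
        if not name or price is None:
            continue  # skip rows missing a required field
        key = (name.lower(), price)
        if key in seen:
            continue  # skip duplicates that only differed in formatting
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


if __name__ == "__main__":
    scraped = [
        {"name": "  Blue   Widget ", "price": "$1,299.00"},
        {"name": "Blue Widget", "price": "1299"},          # duplicate after cleaning
        {"name": "Red Widget", "price": "Call for price"},  # no usable price
        {"name": None, "price": "$5"},                      # missing name
        {"name": "Green Widget", "price": "EUR 15.50"},
    ]
    for row in clean_records(scraped):
        print(row)
```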

- Failing to Monitor and Maintain Scraping Scripts

Websites change frequently: HTML structure, URLs, and CSS class names are updated regularly, so a scraper that works perfectly one day may break the next. Failing to monitor and maintain your scraping scripts is a significant mistake, because it can result in missed or inaccurate data. Check the health of your scraping scripts regularly and be ready to adjust them when a website changes. Setting up alerts for failures or using logging to track the scraper’s progress can help you detect issues early. Additionally, automating parts of the maintenance process with tools that adapt to changes in website structure can help keep your scraper functional over time.
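A simple way to catch breakage early is to validate every batch the scraper produces and log an error when it looks wrong, for example when a selector that used to match now returns nothing. The `check_batch` function, its required fields, and its 20% threshold are hypothetical choices for this sketch; it uses Python’s standard `logging` module, whose error records could be routed to whatever alerting system you already run.

```python
import logging

logger = logging.getLogger("scraper.health")

# Hypothetical fields every scraped record is expected to contain.
REQUIRED_FIELDS = ("name", "price")


def check_batch(records, required=REQUIRED_FIELDS, max_missing_ratio=0.2):
    """Return True if a scraped batch looks healthy; log an error and return False otherwise."""
    if not records:
        # An empty result often means the page structure or URL changed.
        logger.error("Scraper returned no records; the page layout may have changed")
        return False
    # Count records where any required field is absent or an empty string.
    incomplete = sum(
        1 for rec in records
        if any(rec.get(field) in (None, "") for field in required)
    )
    ratio = incomplete / len(records)
    if ratio > max_missing_ratio:
        logger.error("%d of %d records are missing one of: %s",
                     incomplete, len(records), ", ".join(required))
        return False
    logger.info("Batch OK: %d records, %d incomplete", len(records), incomplete)
    return True


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    check_batch([{"name": "Blue Widget", "price": 1299.0}])
    check_batch([{"name": "Blue Widget"}, {"name": "Red Widget", "price": None}])
```

Running a check like this after every scrape turns a silent change in page structure into a logged error you can alert on.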

Conclusion

Web scraping is a powerful tool for data extraction, but it requires careful planning and attention to detail. By avoiding these common mistakes—such as failing to handle dynamic content, ignoring legal and ethical guidelines, overlooking rate limiting, not preparing for data cleaning, and neglecting regular script maintenance—you can ensure that your web scraping projects are successful and sustainable. By taking these precautions, you’ll not only improve the quality of the data you collect but also avoid technical, legal, and ethical issues that could hinder your scraping efforts. Ultimately, the key to successful web scraping lies in being thorough, adaptable, and mindful of the potential challenges that come with the territory.